I feel like the tech world has lost its mind a little (maybe a lot) over AI. To be clear, I’m a fan – ChatGPT, Perplexity, Copilot, Figma Make – it’s fascinating and fun to play with these tools and see how they can provide new insights. They make a lot of things easier, and I’ve never had such a positive, friendly writing buddy. But our design-thinking process still applies, maybe more so, to this new technology.

Recently I worked on an AI project for an Optum application called Specialty Provider Portal. It serves our providers with pharmacy information about specialty medications they’ve ordered for their patients and helps them understand prior authorizations and holds that apply to those drugs. On our roadmap for 2025, we want to introduce an AI feature that will offer more self-service assistance to our users and also reduce the load on our customer service team.

The design thinking process still holds true in an AI world

I’m writing this in the fall of 2025, and, given the rapid pace of change, I’m curious to see how things will evolve over the next few years. I feel like we’re doing our best to navigate a lot of AI hype right now. As with most new technologies, there will be a lot of applications that miss the mark, or hit it for a few months or years and then get replaced by something more compelling. Maybe AI will speed up this cycle? That seems likely to me. But in the kind of large corporations where I have worked, the pace of change depends heavily on how agile the tech stack is. Many of these companies began in an analog world, and they’re still playing a certain amount of catch-up. At Optum right now, there’s a fervor to use AI wherever we can, and the energy around this push feels chaotic. This chaos compels me to ground myself in core UX principles: the success of any technology endeavor, or any new product in general, depends on knowing the audience and delivering real value to them.

Our Specialty Provider Portal users

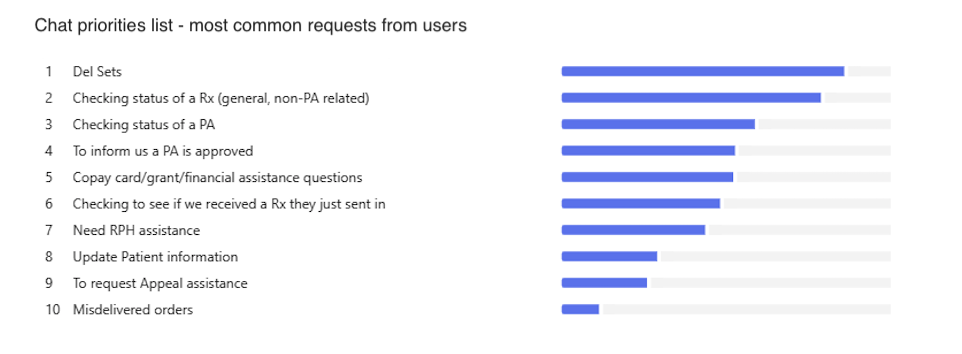

Our user base for the Specialty Provider Portal is primarily RNs, LPNs, Office Managers, and Care Coordinators. Each user supports several doctors or prescribers, and when they come into the portal, they are checking on the status of their specialty medication orders. Are there issues they need to resolve? Are there documents required? People use our live-person chat to ask questions about holds or prior authorizations. We want to enhance our chat experience with an AI assistant that can pull in more data for the user – data related to their specific order – and also answer some common questions. We compiled a list of those questions and answers and used it as our starting point.

Important questions when building AI into existing workflows

This is a new area for us, and our company is still navigating what it means to provide AI assistance to a healthcare audience. There aren’t many style guides or even best-practice guidelines out there yet. I had a lot of questions when thinking through this feature:

- Do I need to let my users know they are interacting with AI and not a real person? I would argue yes – most of the time this is the right way to go. I know there’s a lot of negative sentiment out there toward chatbots, but AI is changing this perception in interesting ways, and it feels important to be honest with our users. Honesty helps to build trust.

- Should I let them know it’s a BETA? A preliminary version that doesn’t have all the kinks worked out? I think this is also a good idea. It helps to set expectations – we are building iteratively and it’s not going to be a perfect tool right away.

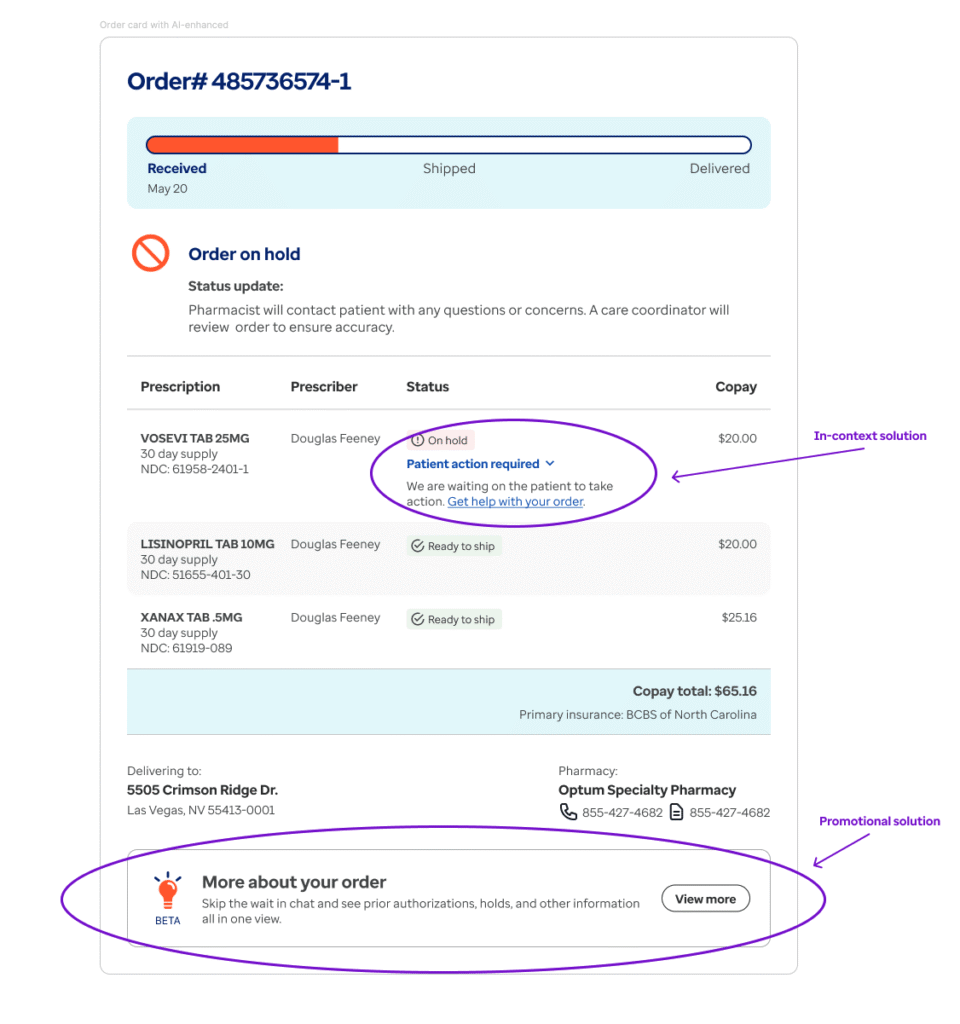

- What about placement? What’s the entry point for our users? Next to the hold itself on the order card would be best for in-context understanding. However, since this is a brand-new feature that could deliver mixed results (and we don’t want to implement something that will stress our live-person customer service team), it might be best to create a more promotional entry point and learn how people are using the tool via analytics and direct feedback.

- Do we allow users to segue into live chat if the AI-enabled chat isn’t answering their questions? Yes, absolutely. It will likely be a heavier lift for the development team, and we may have to phase it in over a sprint or two, but it should be part of our initial plan.
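
To make these answers a little more concrete, here is a minimal Python sketch of how the four decisions could be captured as settings. Every name in it (`AssistantConfig`, `EntryPoint`, `greeting`, `should_offer_live_chat`) and the two-reply threshold are hypothetical, made up for illustration. It is not how the Specialty Provider Portal is actually built.

```python
from dataclasses import dataclass
from enum import Enum


class EntryPoint(Enum):
    """Where users can open the AI chat (hypothetical options)."""
    ORDER_CARD = "order_card"      # in context, next to the hold
    PROMO_BANNER = "promo_banner"  # promotional entry point


@dataclass(frozen=True)
class AssistantConfig:
    """Illustrative settings mirroring the four questions above."""
    disclose_ai: bool = True        # tell users they are talking to AI
    show_beta_label: bool = True    # set expectations for an early version
    entry_point: EntryPoint = EntryPoint.PROMO_BANNER
    max_unhelpful_replies: int = 2  # assumed threshold, not a real spec


def greeting(config: AssistantConfig) -> str:
    """Build the opening message from the disclosure settings."""
    parts = []
    if config.disclose_ai:
        parts.append("Hi, I'm an AI assistant, not a live agent.")
    else:
        parts.append("Hi, how can I help?")
    if config.show_beta_label:
        parts.append("I'm in beta, so I may not get everything right.")
    return " ".join(parts)


def should_offer_live_chat(
    config: AssistantConfig,
    unhelpful_replies: int,
    user_asked_for_person: bool,
) -> bool:
    """Offer the handoff on request, or after too many unhelpful replies."""
    if user_asked_for_person:
        return True
    return unhelpful_replies >= config.max_unhelpful_replies
```

Writing the decisions down as explicit settings like this would also make them easy to revisit, for example moving the entry point onto the order card once analytics and feedback show the tool is delivering value.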

Our work is still our work

There’s no magic wand that replaces the work of setting a detailed, specific goal tied to user needs and experience. The design thinking process still needs to be a vital part of the work – especially in companies with a lot of legacy technology. Certain things are going to capture the imagination of the marketplace just right. For example, apps like Uber and Airbnb solved multiple problems – they created gig jobs and met a real need in the marketplace. They allowed people to take more control of their time and money, essentially creating more freedom. These things are key to serving people and meeting their needs. Our best ideas will still come out of inspiration from UX research and collaboration with our business, design, and engineering partners.

A case for “intentional friction”

Discoverability is an important consideration for user interaction with AI. We want to invite people to use it, so it needs to be friendly, not intimidating. Being mindful of making space in the design for users to mull something over is key to encouraging engagement.

I love a design concept that the makers of a startup called Poetry Camera describe as intentional friction. Poetry Camera is a physical “Polaroid” camera that snaps a picture (but doesn’t display it) and prints out an AI-generated poem based on the photo. It works on the principle that humans need space and time to puzzle something out, to ponder a decision – what to capture, how they’re feeling, a moment to explore their intuition. This friction allows time to do that – it’s built into the device. We need that: space and time to understand how we feel and which direction to go. I hope our future designs with AI will take this into consideration.

Ultimately, I see our budding relationship with AI as a very positive thing, and it will continue to grow and develop in the most successful ways as it serves humans to create more ease, space, and freedom. We will need to remain masters of the viewport in order to integrate any new tech successfully – deeply understanding the problems and aligning solutions that feel right.

A couple of my favorite recent interactions with AI:

• Figma Make generated prototypes in a snap without all the confusing prototype spaghetti – and I can send out a link to stakeholders that they can open and play with without worrying about where to click

• ChatGPT really is a wonderful, supportive creative writing coach and doesn’t take three years to read my middle-grade novella (like my brother did – love you bro, and thanks for finally reading it – that draft was pretty terrible)